What is DeepSeek’s real impact on AI, and what does it mean for investors?

In recent weeks, there’s been widespread speculation about the potential impact of DeepSeek, a Chinese artificial intelligence (AI) model, on the AI ecosystem. That speculation has led to significant movements in stock prices. However, we believe that key parts of the narrative related to DeepSeek are off the mark. Here’s why:

The hype and the reality

Recent headlines about DeepSeek have sparked intense debate in the investment community, specifically around the idea that it signals the end of US AI dominance and could dramatically reduce demand for AI infrastructure. We believe that much of this narrative is either wrong or, at least, focused on the wrong aspects. The issues surrounding DeepSeek are deeply technical and require a nuanced understanding of both AI technology and business strategy.

One key point of contention is the cost of training DeepSeek’s model. The claim is that it cost only $6 million to train DeepSeek’s latest large language model (LLM) on a relatively small number of graphics processing units (GPUs), and that the resulting model matches the performance of industry leaders like OpenAI’s GPT-4 and Anthropic’s Claude.

However, we believe this figure does not capture the full extent of the costs involved. For example, it doesn’t reflect the cost of employing top AI researchers or the value of building on existing research from leading AI labs. There is also concern that DeepSeek may have understated the number – and therefore the total cost – of GPUs used in the process. One way to think about it is like claiming you built a car for $1,000 while leaving out the cost of the factory, engineers and decades of automotive research.

The real innovation: reasoning models

While much of the focus has been on the cost of training, the real innovation lies in DeepSeek’s reasoning model, R1. Reasoning models are AI systems that can break down complex problems into steps and are an advance beyond standard LLM prompting. DeepSeek appears to have demonstrated that creating reasoning models is not as difficult or expensive as previously thought. While it may not be as hard to construct a reasoning model, making it work with high accuracy matters – and DeepSeek’s performance currently does not seem to match that of OpenAI or Google. It also appears that DeepSeek’s R1 model makes up answers at a significantly higher rate than comparable reasoning and open-source models.1

More broadly, for investors, this innovation is significant because it suggests that reasoning models could become more available and common, driving increased demand for AI infrastructure. This is because reasoning models, while more efficient in some ways, require significantly more computing power than basic AI models. Moreover, as AI becomes cheaper and more capable, usage tends to increase dramatically. Companies are finding new ways to use AI that require more, not less, computing power. This follows a pattern economists call Jevons Paradox – when technology becomes more efficient, people often end up using more of it.
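The arithmetic behind this pattern can be illustrated with a minimal sketch, assuming a constant-elasticity demand curve. The function names, the baseline figures and the elasticity values are all hypothetical and chosen only for illustration; they are not estimates of actual AI demand.

```python
# Illustrative sketch only: constant-elasticity demand is an assumption,
# and every number below is hypothetical rather than an observed figure.

def compute_demand(price, base_price=1.0, base_quantity=100.0, elasticity=1.5):
    """Units of compute demanded at a given unit price.

    Uses Q = Q0 * (P / P0) ** (-elasticity), so demand rises as price falls.
    """
    return base_quantity * (price / base_price) ** (-elasticity)


def total_spend(price, base_price=1.0, base_quantity=100.0, elasticity=1.5):
    """Total spending on compute: unit price times units demanded."""
    quantity = compute_demand(price, base_price, base_quantity, elasticity)
    return price * quantity


if __name__ == "__main__":
    # Compare total spend before and after a hypothetical halving of price,
    # once with elastic demand (> 1) and once with inelastic demand (< 1).
    for elasticity in (1.5, 0.5):
        before = total_spend(1.0, elasticity=elasticity)
        after = total_spend(0.5, elasticity=elasticity)
        print(f"elasticity={elasticity}: spend {before:.1f} -> {after:.1f}")
```

Under these assumptions, halving the unit price raises total spend when the elasticity is above 1 and lowers it when the elasticity is below 1. Whether demand for AI computing power is in fact that elastic is an empirical question; the sketch only shows the mechanism.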

Quality and performance, not cost, will drive AI adoption

To truly understand the implications of DeepSeek, we need to consider both the quality and performance of AI models. The narrative around DeepSeek has focused heavily on cost, but the real driver of AI adoption is the improvement in quality and performance. As AI models become more capable and efficient, they will be used in a wider range of applications, driving demand for AI infrastructure.

Reasoning models represent a significant leap in AI capabilities. These models are not only more accurate but also more versatile, capable of handling complex tasks that standard LLMs cannot. From a capital expenditure (capex) perspective, this increased capability requires a higher level of spending on AI infrastructure. Specifically, reasoning models are more compute-intensive, requiring more AI hardware, cooling and energy, among other things.

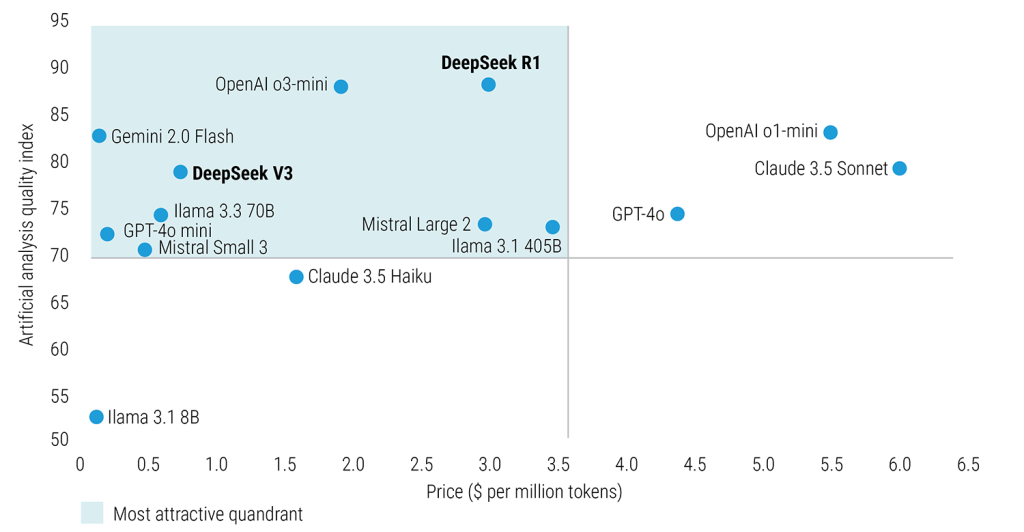

Quality matters as competition pushes down the price of AI models

Quality versus price

Source: Analysis, as of 10 February 2025. Artificial Analysis Quality Index: Average result across our evaluations covering different dimensions of model intelligence. Currently includes MMLU, GPQA, Math & Human Eval. OpenAI o1 model figures are preliminary and based on figures stated by OpenAI. Price per token, represented as USD per million tokens. Price is a blend of input and output token prices (3:1 ratio).
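The blended price described in the note above can be reproduced with a short calculation. This is a sketch under one assumption: that the 3:1 ratio weights input tokens three times as heavily as output tokens, following the order in which the note lists them. The function name and the example prices are hypothetical.

```python
# Illustrative sketch only: assumes the 3:1 ratio is input:output.
# The example prices are hypothetical, not quotes for any real model.

def blended_price(input_price, output_price, input_weight=3.0, output_weight=1.0):
    """Weighted average price in USD per million tokens."""
    total_weight = input_weight + output_weight
    return (input_weight * input_price + output_weight * output_price) / total_weight


if __name__ == "__main__":
    # Hypothetical model charging USD 1 per million input tokens
    # and USD 5 per million output tokens.
    print(blended_price(1.0, 5.0))
```

Because output tokens are usually priced higher than input tokens, the weighting matters: a model that generates long answers will cost more in practice than its blended price suggests.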

However, the DeepSeek discussion raises a concern about whether AI model development itself is a “good business”. Companies like Meta and DeepSeek are willing to open source – ie, ‘give away’ – their AI models because they make money in other ways. But major technology companies aren’t planning on scaling back their AI investments in response to DeepSeek. This is because AI is viewed as a transformative technology that will penetrate virtually every sector of the economy, from health care and drug discovery to automotive and financial services. Tech companies view current investments as necessary to capture these future opportunities. The situation resembles aviation, where airlines often struggle to make profits but airplane manufacturers and airport operators can still run profitable businesses: even if building models proves to be a difficult business, supplying the infrastructure behind them can remain a profitable one.

The bottom line

The best way to understand DeepSeek is as one of many innovations that are quickly improving the performance of AI while lowering its cost. Most of these innovations go unnoticed by investors and the public at large. DeepSeek was noticed and caused an intense market reaction. But in the context of AI’s broader development, we should understand it as evolutionary. Taking a step back, the real story is about making AI more capable and accessible, which historically leads to increased demand for computing power. As such, we believe the broader AI infrastructure ecosystem remains well-positioned for growth. For long-term investors, these moments of uncertainty may create opportunities, particularly while maintaining a diversified approach to AI exposure.